Moving your data beyond Excel

Embracing Uncertainty

There is so much uncertainty in the world around business, safety, and risk. Everything that is in the future has an element of unpredictability and uncertainty to it.

Imagine a trend leading to a future scenario

So, imagine any variable that you’re interested in, in your life or business, and how it moves over time. This could be anything from:

- The price of a commodity on the market

- The sales of a product

- A consumer’s intake of an ingredient or food product

If you track this variable over time, you can imagine a trend like this. However, you can only see the trend looking backward: the path the variable actually followed only becomes visible after the fact.

So, from the perspective of a point in the future, you can look back and see how a variable, metric, or KPI of interest to you has performed over time.

Past performance is no guarantee of future results.

Looking Forward

If you are looking forward from today, your variable’s path is much less predictable. The variable may move up or down over time, depending on the many pressures and constraints acting on it. These influences could come from the market, the weather, the climate, people’s decisions, the media, or anything else that can affect your variable. Many systems are also chaotic: their results are sensitive to small changes in initial conditions.

So, the future looks more like this:

The farther into the future you go, the more this variable can diverge from today’s starting point. We can use various mathematical, and in particular probabilistic, methods to model these systems over time. For future scenarios, you can use probabilistic or Markov chain methods, in which the variable moves stochastically into the future based on probabilities or constraints that you define.
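To make the Markov chain idea concrete, here is a minimal Python sketch. It assumes the variable (say, product demand) is bucketed into three hypothetical states, `low`, `medium`, and `high`, with made-up transition probabilities; a real model would estimate these from historical data. In each simulated period, the next state is drawn using only the current state’s row of probabilities.

```python
import random

# Hypothetical demand states and transition probabilities (illustrative only).
# TRANSITIONS[current] gives the probability of moving to each state in STATES.
STATES = ["low", "medium", "high"]
TRANSITIONS = {
    "low": [0.6, 0.3, 0.1],
    "medium": [0.2, 0.6, 0.2],
    "high": [0.1, 0.3, 0.6],
}


def simulate_path(start: str, steps: int, rng: random.Random) -> list[str]:
    """Walk the chain for `steps` periods, starting from `start`."""
    path = [start]
    for _ in range(steps):
        # Markov property: the next state depends only on the current one.
        weights = TRANSITIONS[path[-1]]
        path.append(rng.choices(STATES, weights=weights, k=1)[0])
    return path


def end_state_distribution(start: str, steps: int, n_paths: int, seed: int = 42) -> dict[str, float]:
    """Fraction of simulated paths that end in each state after `steps` periods."""
    rng = random.Random(seed)
    counts = dict.fromkeys(STATES, 0)
    for _ in range(n_paths):
        counts[simulate_path(start, steps, rng)[-1]] += 1
    return {state: count / n_paths for state, count in counts.items()}


if __name__ == "__main__":
    for horizon in (1, 4, 12):
        print(horizon, end_state_distribution("medium", horizon, n_paths=10_000))
```

With these example probabilities, the share of paths still in the starting state shrinks as the horizon lengthens, which is the divergence from today’s starting point described above.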

In the mathematical model, the more paths you simulate, the finer the resolution you achieve for your future state. This methodology can account for variability and uncertainty in the key metrics or variables of your system.
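One way to see what “more resolution” means: the Monte Carlo estimate of a statistic such as the mean has a standard error that falls roughly as 1/√N in the number of simulated paths N. The sketch below assumes a hypothetical 12-step multiplicative random walk with illustrative drift and volatility; it is not calibrated to any real variable.

```python
import math
import random
import statistics


def simulate_final_values(n_paths: int, steps: int = 12, start: float = 100.0,
                          drift: float = 0.01, volatility: float = 0.05,
                          seed: int = 1) -> list[float]:
    """Simulate n_paths multiplicative random walks; return each path's final value.

    Each step multiplies the value by exp(N(drift, volatility)). The parameters are
    illustrative assumptions, not calibrated to any real variable.
    """
    rng = random.Random(seed)
    finals = []
    for _path in range(n_paths):
        value = start
        for _step in range(steps):
            value *= math.exp(rng.gauss(drift, volatility))
        finals.append(value)
    return finals


def standard_error(values: list[float]) -> float:
    """Standard error of the sample mean; it shrinks roughly as 1 / sqrt(n)."""
    return statistics.stdev(values) / math.sqrt(len(values))


if __name__ == "__main__":
    for n in (100, 1_000, 10_000):
        finals = simulate_final_values(n)
        print(f"{n:>6} paths: mean = {statistics.fmean(finals):.2f} ± {standard_error(finals):.2f}")
```

Each tenfold increase in paths should cut the uncertainty of the estimate by roughly a factor of √10, at ten times the compute.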

Future Scenarios with Uncertainty

What you end up with is not just one prediction for the future, but a distribution of potential outcomes.

The variable will have a central estimate, either the mean (the expected value) or the 50th percentile (the median), along with high and low estimates. Typically, we might use the 95th percentile (P95) as the high estimate of your variable of interest and the 5th percentile (P05) as the low estimate. So, if you’re looking at the sales of a product in your business, you can say: I expect this amount of sales (the expected value); there is a 5% chance that sales exceed this higher level (P95); and in a pessimistic scenario, there is a 5% chance that sales fall below this low level (P05). You now have a better understanding of the range, or the risk, of your future scenario.
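Given a list of simulated outcomes from any probabilistic model, the summary figures above take only a few lines with Python’s standard `statistics` module. The sales distribution in the usage block is a hypothetical log-normal, chosen because it is right-skewed, so the expected value (mean) and the median (P50) differ.

```python
import random
import statistics


def summarize(outcomes: list[float]) -> dict[str, float]:
    """Expected value (mean), median (P50), and P05/P95 of simulated outcomes."""
    # quantiles(n=100) returns the 99 cut points P01..P99; index i holds P(i + 1).
    cuts = statistics.quantiles(outcomes, n=100, method="inclusive")
    return {
        "expected_value": statistics.fmean(outcomes),
        "P05": cuts[4],
        "P50": cuts[49],
        "P95": cuts[94],
    }


if __name__ == "__main__":
    # Hypothetical monthly unit sales: log-normal, so right-skewed (mean > median).
    rng = random.Random(7)
    sales = [rng.lognormvariate(8.0, 0.4) for _ in range(50_000)]
    for name, value in summarize(sales).items():
        print(f"{name:>14}: {value:,.0f} units")
```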

You have a distribution of outcomes with expected values and related probabilities for your future scenario.

Models with Big Data and Probabilistic Input Parameters

When we calculate these types of models, we need to be able to characterize our input variables using distributions, raw data, or ranges.

When Creme Global creates models, we design them so that input values can be entered as discrete point values (decimal numbers) or as mathematical expressions representing probability distributions or ranges. For example, we can specify that a variable varies between A and B using a Uniform(A,B) distribution, or that it follows a Gaussian or Log-Normal distribution. We can also use a Triangular distribution defined by a minimum, a maximum, and a most likely value. We have a lot of experience in defining variables and developing sensible approaches to represent uncertainty and variability in a model. You can also load all of your raw data into the model to specify an empirical distribution that can be used in the scenario modeling.
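A minimal sketch of how such inputs might be turned into samplers, assuming a hypothetical text syntax such as `"Uniform(2, 5)"`. This is not Creme Global’s actual input format. Point values are returned unchanged, a list of raw observations is treated as an empirical distribution by resampling it, and named distributions map onto Python’s `random` module.

```python
import random
import re
from typing import Callable, Union

# Hypothetical input syntax loosely following the text; NOT Creme Global's actual format.
# Each entry maps a distribution name to a function (rng, *params) -> one sample.
_DISTRIBUTIONS = {
    "uniform": lambda rng, a, b: rng.uniform(a, b),
    "normal": lambda rng, mu, sigma: rng.gauss(mu, sigma),
    "gaussian": lambda rng, mu, sigma: rng.gauss(mu, sigma),
    # mu and sigma are the mean and SD of the underlying normal, as in Python's API.
    "lognormal": lambda rng, mu, sigma: rng.lognormvariate(mu, sigma),
    # Python's argument order is (low, high, mode): min, max, most likely.
    "triangular": lambda rng, low, high, mode: rng.triangular(low, high, mode),
}


def make_sampler(spec: Union[float, list, str], rng: random.Random) -> Callable[[], float]:
    """Turn one model input into a zero-argument function that draws a value.

    spec can be a point value (number), raw observations (list, treated as an
    empirical distribution), or an expression such as "Uniform(2, 5)".
    """
    if isinstance(spec, (int, float)):
        return lambda: float(spec)
    if isinstance(spec, list):
        data = list(spec)
        return lambda: rng.choice(data)  # resample the observed values
    match = re.fullmatch(r"\s*(\w+)\s*\(([^)]*)\)\s*", spec)
    if match is None or match.group(1).lower() not in _DISTRIBUTIONS:
        raise ValueError(f"unrecognized input spec: {spec!r}")
    draw = _DISTRIBUTIONS[match.group(1).lower()]
    params = [float(p) for p in match.group(2).split(",")]
    return lambda: draw(rng, *params)


if __name__ == "__main__":
    rng = random.Random(3)
    inputs = {
        "unit_price": 2.5,                          # point value
        "demand": "Triangular(800, 1500, 1000)",    # min, max, most likely
        "unit_cost": "Uniform(1.2, 1.8)",
        "lead_time_days": [3, 4, 4, 5, 7, 4, 6],    # raw observations
    }
    samplers = {name: make_sampler(spec, rng) for name, spec in inputs.items()}
    print({name: draw() for name, draw in samplers.items()})
```

Calling every sampler once per Monte Carlo iteration gives one joint draw of all inputs. Note that this sketch draws each input independently.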

Deterministic Calculations

Deterministic calculations are the standard calculations you typically do in applications such as Excel: you have fixed (deterministic) input data and a calculation that produces a single deterministic output.

Often, when people are faced with uncertainty and variability in their systems, they reduce their data to summary statistics, such as averages, and then use a deterministic Excel calculation to compute the output. This exposes them to the risk known as the “flaw of averages”.

“We have to be careful of the flaw of averages”.

Uncertainty and risk – The Flaw of Averages

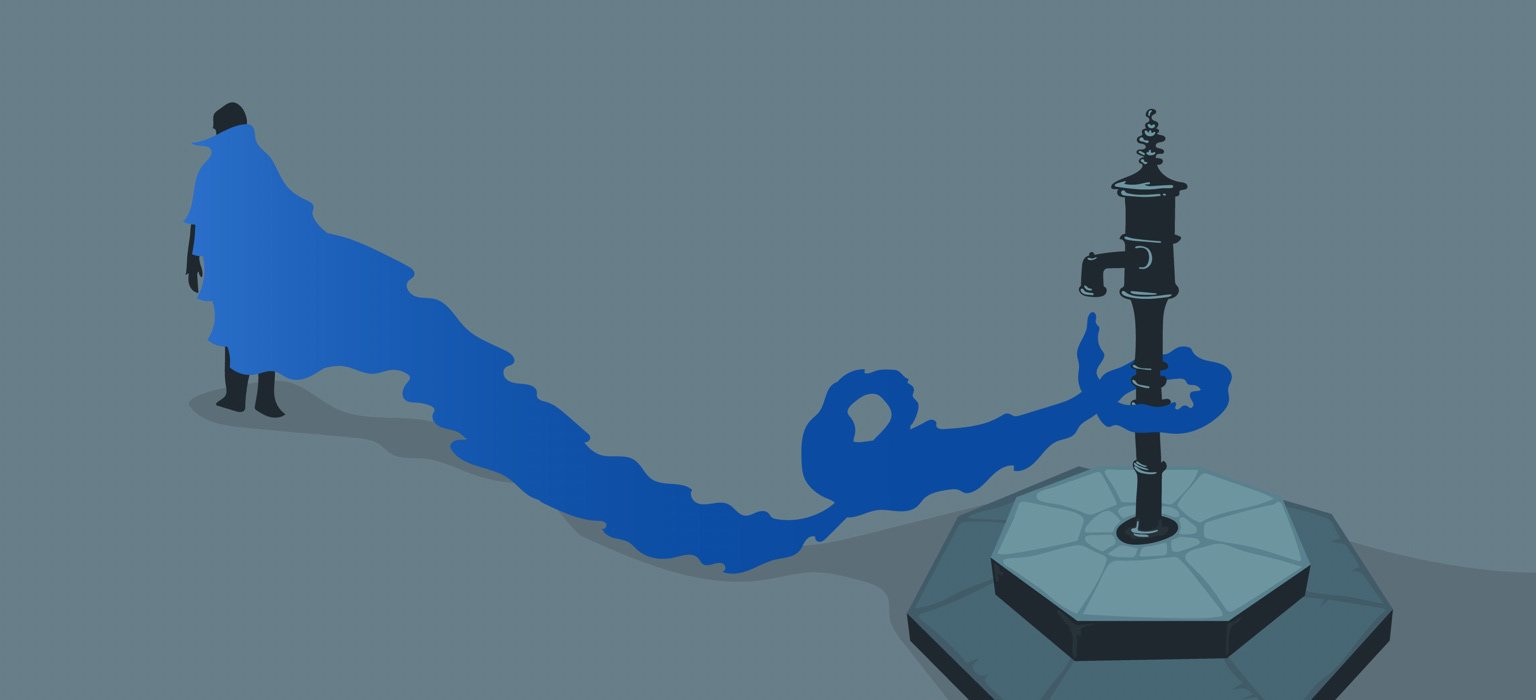

If we calculate a result from only the average value of each input, we lose sight of the potential range of each input and of the resulting range of outputs from the system. This can lead to bad decisions based on the flaw of averages, illustrated here: the average depth of the river may be one meter, but that doesn’t mean it’s safe to wade across.
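A small numeric illustration of the flaw of averages, using hypothetical numbers. A plant can sell at most 1,000 units per month (its capacity), and demand averages 1,000 units but varies. Plugging average demand into the profit formula gives the “Excel answer”. Averaging the profit over simulated demand gives a lower figure, because sales above capacity are lost, while sales below it are not offset.

```python
import random
import statistics

CAPACITY = 1_000   # hypothetical maximum units the plant can supply per month
MARGIN = 4.0       # hypothetical profit per unit sold


def profit(demand: float) -> float:
    """Sales are capped by capacity, so profit is a nonlinear function of demand."""
    return MARGIN * min(demand, CAPACITY)


rng = random.Random(11)
# Hypothetical demand: normal around the capacity level, truncated at zero.
demands = [max(0.0, rng.gauss(1_000, 250)) for _ in range(100_000)]

profit_of_average = profit(statistics.fmean(demands))             # deterministic answer
average_of_profit = statistics.fmean(profit(d) for d in demands)  # simulated answer

print(f"profit at average demand: {profit_of_average:,.0f}")
print(f"average simulated profit: {average_of_profit:,.0f}")
```

Because `min(demand, capacity)` is concave, Jensen’s inequality guarantees that the average profit can never exceed the profit at average demand. With these example numbers, the gap comes out at roughly 10%.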

Multivariate Data?

So far, we’ve only considered individual variables. To complicate things further, most real systems are multivariate: they involve many variables rather than a single one.

So imagine now that your data has multiple dimensions; these could be related or independent variables. For example, your system could involve production time, energy cost, quality metrics, volume, lead time, and shelf life. You may have an insight into each of these variables from the historical data you have collected.

Your planning now needs to take multiple probabilistic variables into account, and your mathematical models need to be capable of handling them. You can use various mathematical methods, for example, machine learning or Monte Carlo-based predictive analytics. Once you need these more advanced methods, spreadsheet programs such as Excel can no longer cope with this volume of data or this level of sophistication in model inputs and outputs.

Machine Learning

Machine learning methods can help. You can apply many different supervised or unsupervised machine learning techniques. For example, you can use machine learning to find groupings or clusters of data, scenarios, or subsets within your multivariate data. These sub-groups could point you to the most probable scenarios, the highest-risk scenarios, or even the most profitable scenarios for your business.

Machine learning can help you organize data that is otherwise hard to understand into clear scenarios or clusters, so you can start to see which are the most important or most relevant for your business.
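As one concrete example of an unsupervised method, here is plain k-means clustering (Lloyd’s algorithm) in standard-library Python. The text does not say which algorithms are used in practice, so treat this as an illustrative choice. The scenario features in the usage block, profit margin and a risk score, are hypothetical.

```python
import math
import random

Point = tuple[float, ...]


def kmeans(points: list[Point], k: int, iterations: int = 50, seed: int = 0) -> tuple[list[Point], list[int]]:
    """Plain k-means (Lloyd's algorithm). Returns (centroids, cluster label per point)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise from k randomly chosen data points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(tuple(sum(coords) / len(members) for coords in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster where it was
        if new_centroids == centroids:
            break  # assignments can no longer change: converged
        centroids = new_centroids
    return centroids, labels


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical scenarios described by (profit margin %, risk score), in three loose groups.
    centres = [(5.0, 2.0), (15.0, 8.0), (25.0, 3.0)]
    data = [(rng.gauss(x, 1.5), rng.gauss(y, 1.0)) for x, y in centres for _ in range(100)]
    centroids, _labels = kmeans(data, k=3)
    for margin, risk in sorted(centroids):
        print(f"cluster centre: margin {margin:.1f}%, risk score {risk:.1f}")
```

k-means can settle in a local optimum depending on its starting centroids, so in practice it is usually run from several starting points. scikit-learn’s `KMeans` does this through its `n_init` parameter. Features on very different scales should also be standardized first.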

Data is key

Data is crucial to this type of activity. It informs all of your inputs, so you need a method for gathering, structuring, and storing data sets across your business. Data storage is cheap, so you should store every parameter that you think could be important.

Parameters that you think are unimportant can sometimes turn out to be quite influential on the outputs you care about.

Probabilistic modeling calculations

Machine learning can help handle these multiple streams of data, and different methods can take distributions, raw data, or data files as inputs. Methods ranging from Monte Carlo simulation to machine learning can generate structured output data, which you can view in graphical form to understand potential trends, correlations, and influencers that may not be apparent in the raw data.

Analysis

The analysis that you need to carry out to make the most of this data has to account for the uncertainty, variability, trends, scenarios, correlations, risks, and potential rewards contained within your data set.

Case study: AI for food hazard identification

In this case study, I discuss work we have done using AI and a “Data Trust” approach to allow organizations to share data that can identify food hazards and authenticity issues in the supply chain.

Ingredient Supply Chain

The food supply chain is very dynamic and extensive. It is international and has many moving parts, and many different events can influence the food supply and introduce risk.

Retailers and food manufacturers are very conscious of food safety and try to minimize risk and authenticity concerns arising from food fraud in their supply chains. So, what kind of data can we bring to bear on this question?

fiin

In the fiin project, over 60 companies and retailers work together to share data and information in a secure, anonymized way, using a Data Trust hosted by Creme Global. A Data Trust is a secure technology platform that organizations use to share data, combined with a legal agreement on the ownership and permitted uses of the submitted data.

The key information they share is on detections of authenticity concerns in their supply chains, which come from lab-based measurements on products. We aggregate this data and use it to help them understand trends and, ultimately, predict risk.
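The text does not describe how the aggregation is done. As a hypothetical sketch, assume each shared test result is pooled as a simple record of participant, year, product category, and whether an authenticity issue was detected. The function computes a network-wide detection rate per year for one category. It also applies an illustrative anonymity rule: years with too few contributing organizations are suppressed. This rule is an assumption, not a documented fiin policy.

```python
from collections import defaultdict

# Hypothetical pooled records: (participant, year, product_category, issue_detected)
Record = tuple[str, int, str, bool]


def detection_trend(records: list[Record], category: str, min_participants: int = 3) -> dict[int, float]:
    """Network-wide detection rate per year for one product category.

    Years with fewer than `min_participants` contributing organizations are left out,
    so no single organization's results can be read off the aggregate (an
    illustrative anonymity rule, not a documented fiin policy).
    """
    tests = defaultdict(int)
    hits = defaultdict(int)
    contributors = defaultdict(set)
    for participant, year, cat, detected in records:
        if cat != category:
            continue
        tests[year] += 1
        hits[year] += int(detected)
        contributors[year].add(participant)
    return {
        year: hits[year] / tests[year]
        for year in sorted(tests)
        if len(contributors[year]) >= min_participants
    }
```

A rising rate across years for a category would be the kind of trend that could prompt closer testing of that ingredient.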

Members share information on the testing they carry out, the results they are seeing, and the insights they are gaining across a network called fiin, the Food Industry Intelligence Network. Creme Global facilitates this using the private and secure Data Trust that we provide.

How the Data Trust works

Creme Global has deployed a secure portal system where organizations can securely and anonymously upload data on the testing programs they’re carrying out and their food supply chain.

We supplement the uploaded data with public data, including government-published information, food intake data, and EU food safety alerts. All of this data is structured and combined in a Data Mart, which can then be used for predictive analytics and visualization. Each participating organization has access to secure dashboards, which it uses to compare its performance with industry aggregate data and to spot trends and risks that would not be apparent from its own data alone.
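The dashboards’ internals are not described, so here is only a hypothetical sketch of the comparison they enable. It computes one participant’s detection rate for a product category alongside the rate for everyone else in the network. In a real data trust, this calculation would run on the platform side, so that participants see only the aggregate and never each other’s records.

```python
import math

# Hypothetical pooled records: (participant, year, product_category, issue_detected)
Record = tuple[str, int, str, bool]


def _rate(flags: list[bool]) -> float:
    """Share of tests with a detection; NaN when there are no tests."""
    return sum(flags) / len(flags) if flags else math.nan


def benchmark(records: list[Record], me: str, category: str) -> dict[str, float]:
    """Compare one participant's detection rate with the rest of the network."""
    own = [hit for who, _, cat, hit in records if cat == category and who == me]
    others = [hit for who, _, cat, hit in records if cat == category and who != me]
    return {
        "own_rate": _rate(own),
        "industry_rate": _rate(others),
        "industry_tests": len(others),
    }
```

Reporting `industry_tests` alongside the rate shows how much evidence sits behind the industry figure.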

Data collection

Participants can upload data in several ways. They can use APIs, or log in to a portal and upload files in standard formats such as Excel, CSV, XML, or JSON. As we can see here, there are various user-friendly ways of submitting data.
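Whatever the upload route, submitted files need checking before they reach the database. The sketch below assumes a hypothetical four-column schema (`sample_id`, `test_date`, `product`, `result`); the real fiin format is not described in the text. It handles CSV and JSON with the standard library. Excel and XML would need another parser, such as `openpyxl` or `xml.etree.ElementTree`.

```python
import csv
import io
import json
from datetime import date

# Hypothetical submission schema; the real fiin upload format is not described here.
REQUIRED = ("sample_id", "test_date", "product", "result")
ALLOWED_RESULTS = {"pass", "fail"}


def _is_blank(value) -> bool:
    return value is None or str(value).strip() == ""


def validate_row(row: dict) -> list[str]:
    """Return the problems found in one submitted record (empty list = valid)."""
    problems = [f"missing {name}" for name in REQUIRED if _is_blank(row.get(name))]
    if problems:
        return problems
    try:
        date.fromisoformat(str(row["test_date"]))  # expect YYYY-MM-DD
    except ValueError:
        problems.append(f"bad test_date {row['test_date']!r}")
    if str(row["result"]).strip().lower() not in ALLOWED_RESULTS:
        problems.append(f"bad result {row['result']!r}")
    return problems


def load_submission(text: str, fmt: str) -> tuple[list[dict], list[tuple[int, list[str]]]]:
    """Parse CSV or JSON text and split it into valid rows and (row number, problems)."""
    if fmt == "csv":
        rows = list(csv.DictReader(io.StringIO(text)))
    elif fmt == "json":
        rows = json.loads(text)  # expects a JSON array of objects
    else:
        raise ValueError(f"unsupported format: {fmt!r}")
    valid, rejected = [], []
    for number, row in enumerate(rows, start=1):
        problems = validate_row(row)
        if problems:
            rejected.append((number, problems))
        else:
            valid.append(row)
    return valid, rejected
```

Returning rejected rows with their problems, rather than failing the whole file, lets the uploader fix just the bad records.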

Organizations can also use a two-step approval process: one person uploads the data, and an authorized user then reviews it and submits it to the master database.
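The two-step process can be modeled as a small state machine, sketched here with hypothetical class and method names. An upload is staged as `pending`, and only an authorized reviewer can move it into the master database (represented by a plain list). The rule that the reviewer must be a different person from the uploader is an added assumption; the text only says the reviewer must be authorized.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Submission:
    """One upload in a hypothetical two-step approval workflow."""
    uploader: str
    rows: list
    status: str = "pending"          # pending -> submitted (or rejected)
    reviewer: Optional[str] = None


class ApprovalWorkflow:
    def __init__(self, approvers: set[str]) -> None:
        self.approvers = approvers   # users authorized to review and submit
        self.master_db: list = []    # stands in for the master database

    def upload(self, user: str, rows: list) -> Submission:
        """Step 1: stage the data; nothing reaches the master database yet."""
        return Submission(uploader=user, rows=list(rows))

    def review(self, submission: Submission, reviewer: str, accept: bool) -> None:
        """Step 2: an authorized second person accepts (submits) or rejects the upload."""
        if reviewer not in self.approvers:
            raise PermissionError(f"{reviewer} is not an authorized approver")
        if reviewer == submission.uploader:
            raise PermissionError("uploads must be reviewed by a second person")
        if submission.status != "pending":
            raise ValueError(f"submission is already {submission.status}")
        submission.reviewer = reviewer
        if accept:
            self.master_db.extend(submission.rows)
            submission.status = "submitted"
        else:
            submission.status = "rejected"
```

Keeping staged uploads out of `master_db` until review means that a mistaken upload never contaminates the shared aggregates.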

Visualizing the data

The following slide does not contain actual data; it demonstrates the type of visualization that is possible with aggregated data. It shows the kinds of information that can be gleaned from food safety data and how risks and trends can be highlighted in different regions.

Moving from trends to likely scenarios – Data and analytics

Moving from trends to likely scenarios means moving from basic calculations on simple data sets in tools like Excel to more sophisticated methods using databases and code. Databases allow us to manage a secure data trust system, aggregate large data sets of millions of records, and connect to software code that runs mathematical models on that data, which can help us identify and inform future scenarios.
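A minimal sketch of the database-plus-code pattern, using Python’s built-in `sqlite3` as a stand-in for a production database. The table layout, products, and placeholder origins (`X`, `Y`, `Z`) are hypothetical. The aggregation runs in SQL, close to the data, and Python receives only the summarized rows to feed into further modeling.

```python
import sqlite3

Row = tuple[str, str, int]  # (product, origin, detected 0/1): hypothetical schema


def build_database(rows: list[Row]) -> sqlite3.Connection:
    """Load test results into an in-memory SQLite database (stand-in for a real one)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE test_results (product TEXT, origin TEXT, detected INTEGER)")
    conn.executemany("INSERT INTO test_results VALUES (?, ?, ?)", rows)
    return conn


def detection_rates(conn: sqlite3.Connection, min_tests: int = 2) -> list[tuple[str, str, int, float]]:
    """Aggregate in SQL: detection rate per (product, origin), highest rate first."""
    query = """
        SELECT product, origin, COUNT(*) AS n_tests, AVG(detected) AS rate
        FROM test_results
        GROUP BY product, origin
        HAVING COUNT(*) >= ?
        ORDER BY rate DESC, n_tests DESC
    """
    return conn.execute(query, (min_tests,)).fetchall()


if __name__ == "__main__":
    conn = build_database([
        ("honey", "X", 1), ("honey", "X", 0), ("honey", "Y", 0), ("honey", "Y", 0),
        ("oregano", "Z", 1), ("oregano", "Z", 1), ("oregano", "Z", 0), ("saffron", "X", 1),
    ])
    for product, origin, n_tests, rate in detection_rates(conn):
        print(f"{product:<8} {origin}  tests={n_tests}  rate={rate:.0%}")
```

The `min_tests` threshold keeps combinations with only one or two results from topping the list on thin evidence.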

These techniques then allow us to understand the key influencers within those scenarios and to identify the future scenarios that could be the most profitable, the least risky, and so on for your business.

A key to this is getting your data in order: tracking and storing all of the data that may influence your system or business, and sharing data between organizations or within your organization as required.

Structuring that data in a more sophisticated data system, such as a database or a data trust, and then running Python code on it (Python is a programming language with many libraries for machine learning and probabilistic methods) is the path from simple calculations and visualizations to more powerful machine learning methods that discover trends and scenarios in your data.

And that’s how we transition from simple trend forecasting to future scenarios using big data and AI.

Organizational Culture

In an increasingly interconnected global food industry, organizations must not only maintain internal standards but also foster collaboration with external partners to enhance food safety. A strong organizational culture that embraces innovation and cross-industry cooperation can lead to significant advancements in risk forecasting and mitigation. By integrating predictive analytics with real-time data sharing, organizations can proactively identify potential risks across the supply chain. This collaborative approach not only strengthens food safety protocols but also creates a network of shared responsibility, where producers, processors, and supply chain partners work in tandem to uphold safety standards. As regulations become more stringent, adopting such data-driven strategies enables organizations to stay ahead of compliance requirements, ensuring they can effectively manage risks and seize new opportunities in the market.

About Creme Global

Creme Global has a strong technology platform for aggregating and organizing data, which can then be used in predictive models ranging from risk modeling to machine learning, predictive analytics, and visual analytics using dashboards. We take a scientific approach to our predictive analytics, computing, and machine learning methods.